Part 7: How to Run Inference on the Microsoft Phi-2 Language Model on Google Colab

Microsoft released the Phi-2 language model on 12 December 2023. While much of the field is racing to train ever-larger language models, one of the biggest use cases for language models is likely to be as an assistant on mobile phones. In December 2023 Google launched the Gemini Nano model, which will run on Pixel 8 Pro phones. Only a few days later Microsoft released Phi-2, which is similar in size to Gemini Nano but performs better.

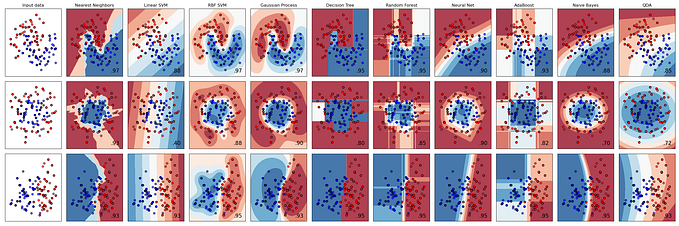

Comparison between the Phi-2 model and the Gemini Nano model

Phi-2 not only holds its own against Gemini Nano, it also performs not much worse than much larger open-source language models.

Comparison between the Phi-2 model and larger open-source models

Notably, for coding, Phi-2 does better than even the 70-billion-parameter Llama-2 model.

Inference on Phi-2 language model

To run inference on the Phi-2 language model, follow the steps below. You can run the code in Google Colab. You can also run it on your local laptop or PC, but you have to install an appropriate CUDA driver for your GPU.
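Before starting, it can help to confirm that the machine actually exposes a CUDA-capable GPU. The following is a minimal sketch, not part of the original walkthrough: the helper name `describe_gpu_setup` is hypothetical, and it assumes that finding `nvidia-smi` on the PATH is a reasonable hint that an NVIDIA driver is installed.

```python
import importlib.util
import shutil


def describe_gpu_setup() -> dict:
    """Collect a few hints about whether this machine can run the CUDA steps."""
    report = {
        # nvidia-smi ships with the NVIDIA driver, so finding it suggests a driver is present
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
        # PyTorch is preinstalled on Colab; on a local machine it may be missing
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        import torch

        # True only if PyTorch can see a usable CUDA device
        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


print(describe_gpu_setup())
```

If `cuda_available` comes back `False` in Colab, the runtime type is probably still set to CPU.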

1. Open a new Colab notebook and choose GPU as the runtime type.

2. Install the einops library

!pip install einops

3. Import the required libraries

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

4. Instantiate the model

torch.set_default_device("cuda")
model = AutoModelForCausalLM.from_pretrained("microsoft/phi-2", torch_dtype="auto", trust_remote_code=True)
tokenizer = AutoTokenizer.from_pretrained("microsoft/phi-2", trust_remote_code=True)

This will download the Phi-2 language model.

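5. Define the following function for querying the model

def response(query):
    # Tokenize the prompt into PyTorch tensors (created on the default CUDA device)
    inputs = tokenizer(query, return_tensors="pt", return_attention_mask=False)
    # max_length caps the prompt plus the generated tokens at 200 in total
    outputs = model.generate(**inputs, max_length=200)
    # Decode the first (and only) sequence in the batch back into text
    text = tokenizer.batch_decode(outputs)[0]
    return text

I tried a couple of queries on the model.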
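As an illustration of how such a query might be phrased, the sketch below wraps a question in the "Instruct: ... Output:" format that the Phi-2 model card suggests for question answering, then trims the echoed prompt from the result. The helper names `build_prompt`, `extract_answer`, `ask`, and `fake_response` are hypothetical, and the `<|endoftext|>` marker is assumed to be how the tokenizer decodes the end-of-sequence token. `fake_response` stands in for the `response` function from step 5 so the sketch runs without a GPU.

```python
def build_prompt(question: str) -> str:
    """Wrap a question in the 'Instruct: ... Output:' question-answering format."""
    return f"Instruct: {question.strip()}\nOutput:"


def extract_answer(generated: str, prompt: str) -> str:
    """Drop the echoed prompt and the end-of-text marker from the decoded text."""
    # The decoded text contains the prompt followed by the completion
    answer = generated[len(prompt):] if generated.startswith(prompt) else generated
    # Assumption: the end-of-sequence token decodes to "<|endoftext|>"
    answer = answer.split("<|endoftext|>")[0]
    return answer.strip()


def ask(question: str, generate_fn) -> str:
    """Query the model; generate_fn is expected to behave like response() from step 5."""
    prompt = build_prompt(question)
    return extract_answer(generate_fn(prompt), prompt)


# Stand-in for response() so this sketch runs without a model download
def fake_response(prompt: str) -> str:
    return prompt + " Paris is the capital of France.<|endoftext|>"


print(ask("What is the capital of France?", fake_response))
```

In the notebook, calling `ask("What is the capital of France?", response)` would send the same prompt to the real model.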

The performance of the Phi-2 model is nowhere near that of the free version of ChatGPT. But if you want to run an LLM locally on a mobile phone or an inexpensive PC or laptop, it is hard to find anything better than Phi-2.
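To get a rough feel for why a model of this size suits modest hardware, the memory needed for the weights alone can be estimated from the parameter count. This is a back-of-the-envelope sketch: it uses Phi-2's published size of 2.7 billion parameters, the function name `weight_memory_gib` is hypothetical, and the estimate ignores activations and the generation cache, so real usage will be higher.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 1024**3


PHI2_PARAMS = 2.7e9  # Phi-2 has 2.7 billion parameters

for dtype_name, nbytes in [("float32", 4), ("float16", 2)]:
    print(f"{dtype_name}: {weight_memory_gib(PHI2_PARAMS, nbytes):.1f} GiB")
# float32: 10.1 GiB
# float16: 5.0 GiB
```

In half precision the weights come to about 5 GiB, which is within reach of a single consumer GPU.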
